Goal

Understand interaural cues.

Interaural Cues

We have an astonishing ability to locate a sound source even with our eyes closed. We do this through 3 key effects.

Level differences

Timing differences

Spectral differences

These differences, or "cues," are called interaural cues: "inter" is Latin for "between" or "among," and "aural" comes from the Latin "auris," meaning "ear." An interaural cue is a difference in sound between the ears. The exception is spectral cues, which require only a single ear.

In general, sound can be louder in one ear than the other, it can reach one ear before the other, and it can be altered by a single ear depending on the direction it arrives from. The first two cues let us detect horizontal changes in a sound's position, and the last one lets us detect vertical changes.

Interaural Intensity Differences (IID)

We can tell where a sound is coming from based on whether it is more intense in one ear than the other. Remember that in acoustics, intensity means power per unit area; in the case of hearing, think of it as how much the sound shakes your eardrum and moves the fluid in your cochlea. If one ear is moving more than the other, there is a good chance the sound is coming from the side of the greater movement. This difference is called the interaural intensity difference, or IID.
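To make the idea concrete, here is a minimal Python sketch that measures an IID from two ear signals. It assumes the signals are plain lists of samples and uses RMS amplitude as a stand-in for intensity; since intensity goes with amplitude squared, the difference in dB is 20 * log10 of the RMS ratio. The names `rms` and `iid_db` are hypothetical helpers, not from any library.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def iid_db(left, right):
    """Interaural intensity difference in dB (positive = louder in the left ear).

    Intensity is proportional to amplitude squared, so
    10*log10(rms_l**2 / rms_r**2) == 20*log10(rms_l / rms_r).
    """
    return 20 * math.log10(rms(left) / rms(right))

# A 1 kHz tone sampled at 48 kHz that arrives at half amplitude in the right ear.
sr = 48000
tone = [math.sin(2 * math.pi * 1000 * n / sr) for n in range(sr // 10)]
left = tone
right = [0.5 * s for s in tone]
print(round(iid_db(left, right), 2))  # 6.02
```

Halving the amplitude in one ear gives an IID of about 6 dB toward the other ear.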

In audio, IIDs can arise in various ways. The most prominent are perhaps stereo panning and equalization.

Stereo Panning

When we pan a sound, the idea is to fool the brain into thinking the sound is coming from the left or the right by making it louder on the left or the right respectively. If the sound is the same in both ears, it must be directly ahead of or behind us. If we have two audio channels carrying our signal, we can do a few different things:

Play the same signal in both channels to give the impression it is directly in front.

Turn down the sound in one of the channels, making the other one seem louder. The sound then seems to come from the louder side.

Turn up the sound in one of the channels, making the other one seem softer. Again, the sound seems to come from the louder side.

In the simplest form of panning, we take the audio we want on the left and send it left, and the audio we want on the right and send it right. Turning the left fader up or down changes the signal in the left speaker, and likewise the right fader changes the right signal. In this situation, the level changes are subtractive or additive depending on how we use the faders.

There is an issue with such a simple approach. If the goal is for the sound to appear to move left or right as we turn the pan knob, we must also consider the sound's intensity as we change the left and right levels. Do we want it to keep the same loudness as it is panned? If a correlated signal is played at full level in both the left and right channels, it sums to a 6 dB boost in the middle (in ideal conditions), and it loses this boost as it is panned in one direction or the other, making the middle louder than the other positions.

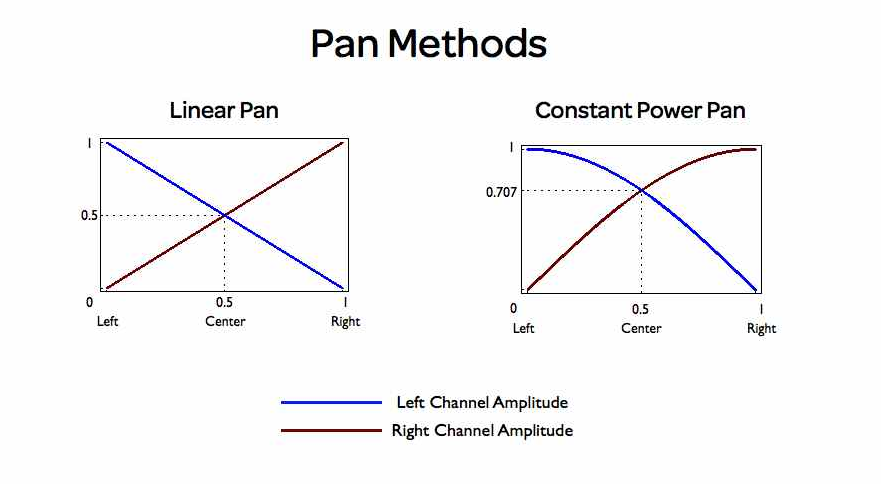
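The 6 dB figure assumes the two channels carry perfectly correlated (identical) signals, whose amplitudes add; unrelated signals add in power instead, which gives about 3 dB. The sketch below checks both cases numerically, assuming a 48 kHz sample rate and using two sine tones at different frequencies as stand-ins for uncorrelated material. It is an idealized electrical sum, not a model of a room.

```python
import math

SR = 48000  # sample rate in Hz (assumed)

def tone(freq_hz, seconds=1.0, amp=1.0):
    """A sine tone as a list of samples."""
    n = int(SR * seconds)
    return [amp * math.sin(2 * math.pi * freq_hz * i / SR) for i in range(n)]

def power_db(samples):
    """Mean-square power of a signal in dB, relative to a full-scale value of 1.0."""
    return 10 * math.log10(sum(s * s for s in samples) / len(samples))

a = tone(1000)
b = tone(1500)  # a different frequency: uncorrelated with a over one second

single = power_db(a)
correlated = power_db([x + x for x in a])               # same wave in both channels
uncorrelated = power_db([x + y for x, y in zip(a, b)])  # unrelated waves

print(round(correlated - single, 2))    # 6.02
print(round(uncorrelated - single, 2))  # 3.01
```

Real rooms sit somewhere between these two results, which is why the different pan laws exist.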

This introduces the concept of a pan law. The idea is that as we pan from one direction to another, we change the levels in both channels in such a way as to give the impression that the sound is equally loud in any direction. This way we can place a sound in a mix and then use the fader to adjust its level. The most common pan laws are the circular pan law (constant power pan law), the triangular pan law (linear pan law) and the 4.5 dB pan law. Some systems, such as Reaper, let you specify the exact level you want.

The different levels used by the various pan laws exist because they assume different listening rooms. Most rooms and environments do not sum ideally, so a centered sound gets closer to a 3 dB boost and behaves like uncorrelated sound. The 4.5 dB law is a middle ground, and the triangular pan law acts exactly as we would expect, with a boost in the middle and the sides sounding softer.

Warning about panning

However, how panning is implemented in a DAW or console can vary a great deal, and that has consequences. This is because there is another layer of complexity: we must also decide whether the pan should treat the left and right channels separately or together. If we pan hard left, should the right totally disappear? Or should some of the right make its way into the left channel? For example, if the left is playing 2 notes and the right is playing 2 other notes and we pan the track, should we hear all 4 notes as we pan in a given direction? How should the balance between them change? What would best represent our stereo image?

Well, the answers to these questions have led to a lot of confusion online. Some DAWs support only one or two pan laws and only one type of left and right interaction, while others have extensive options. DAWs that only support "left and right combined" panning limit mixing; as a result, they require plugins as a workaround when panning is done for technical rather than aesthetic reasons. In some cases this even limits the aesthetic possibilities.

Down to mono panning

In one corner are the DAWs that add the right channel into the left (or the left into the right) as the sound is panned. This can have terrible consequences if the channels are out of phase: because of this summation, the signals cancel out before they ever come out of our speakers! (Recall our discussion on ideal phasing and acoustic phasing.) It also makes setting up mid-side routing with just the pan knobs impossible.

The upside of such a system is that stereo content is somewhat preserved regardless of pan. For example, if the left is playing 2 notes and the right is playing 2 notes, we will still hear all 4 notes even when hard panned. If we were in a real space with instruments on our left and right (just as hard panning would suggest), we would still hear all 4 notes, so this makes some sense. There would, however, be several other cues going on as well, and the notes certainly wouldn't all sound equally loud!
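The following Python sketch models a combined panner of this kind. It is a simplified, hypothetical model (the function `combined_pan` and its fold-in rule are assumptions for illustration, not the documented algorithm of FL Studio, Renoise, or any other DAW): panning left moves a growing share of the right channel into the left output. It shows both sides of the trade-off: a polarity-inverted stereo pair cancels completely at hard left, while two different notes, one per channel, both survive.

```python
def combined_pan(left, right, pan):
    """Hypothetical 'combined' panner that folds one channel into the other.

    pan runs from -1.0 (hard left) to +1.0 (hard right). Panning left moves a
    growing share of the right channel into the left output, so at hard left
    the left output is simply left + right and the right output is silent.
    """
    if pan <= 0:
        a = -pan
        out_l = [l + a * r for l, r in zip(left, right)]
        out_r = [(1 - a) * r for r in right]
    else:
        a = pan
        out_l = [(1 - a) * l for l in left]
        out_r = [r + a * l for l, r in zip(left, right)]
    return out_l, out_r

# Out of phase: the right channel is the polarity-inverted left channel.
left = [0.5, 1.0, 0.5, -0.5, -1.0, -0.5]
right = [-s for s in left]
out_l, out_r = combined_pan(left, right, -1.0)   # hard left
print(max(abs(s) for s in out_l + out_r))        # 0.0: everything cancelled

# Two different "notes", one per channel: hard left keeps both.
notes_l, _ = combined_pan([1.0, 0.0], [0.0, 1.0], -1.0)
print(notes_l)  # [1.0, 1.0]
```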

DAWs such as FL Studio and Renoise do this type of panning with no alternative, so it is important to be aware of it when mixing in them. Phase differences between the left and right channels are usually part of what gives stereo its magic, but they can get particularly messy when you pan with the built-in pan knobs, and they can have obvious drawbacks when summed to mono. Examples include stereo miking that relies on panning that isn't hard left or right, or any correlated phase difference between the left and right. These cases will produce considerable phase issues, and it is advisable to use a plugin that keeps the left and right separate when panning. (There are many free options.) This is also why FL Studio and Renoise provide a separate plugin for configuring mid-side: they cannot do it with their pan knobs because of the summation they perform.

Independent Panning

Then there are systems that keep the channels independent. DAWs like Reaper have extensive pan law options, let the user pick how the left and right interact when panning, and even let them choose the crossfade. Logic is another example of a DAW that lets users pick how the left and right interact to create panning. If the left and right remain independent when panning, then notes in only one channel can disappear if that channel is hard panned away, and the balance can change a lot depending on how things were panned earlier in the chain when multiple pan stages occur. For example, say you set up a group and then send it through an auxiliary channel. If you pan the group elements and then pan the aux channel, the aux panning works on the balance of the group you have already mixed, and if you hard pan or aggressively pan the aux, it may completely remove any heavily panned instruments in the group! This is why other panning options are typically available.
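Here is a matching sketch of independent panning, again a hypothetical model rather than any DAW's exact behavior: each channel is only turned down, never moved into the other. Running a group through two pan stages reproduces the aux-channel problem described above: a track panned hard right inside the group disappears entirely when the aux is then panned hard left.

```python
def balance_pan(left, right, pan):
    """Hypothetical 'independent' panner: each channel keeps its own content.

    pan runs from -1.0 (hard left) to +1.0 (hard right). Panning left only
    turns the right channel down (and vice versa) with a linear fade; nothing
    is moved from one channel into the other.
    """
    gain_l = min(1.0, 1.0 - pan)  # 1.0 from hard left to center, 0.0 at hard right
    gain_r = min(1.0, 1.0 + pan)  # 1.0 from hard right to center, 0.0 at hard left
    return [gain_l * s for s in left], [gain_r * s for s in right]

# Stage 1: inside a group, a mono shaker track is panned hard right.
shaker = [0.3, -0.3, 0.3, -0.3]
group_l, group_r = balance_pan(shaker, shaker, 1.0)

# Stage 2: the aux channel carrying the group is then panned hard left.
aux_l, aux_r = balance_pan(group_l, group_r, -1.0)
print(max(abs(s) for s in aux_l + aux_r))  # 0.0: the shaker has vanished
```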

Pan law is separate from this question of how the left and right channels carry out the pan law, but with the distinction pointed out directly, it should now be clearer that panning in either system is just a choice, and you should have some idea why you may need another tool or mode to get the result you want. In most cases I am fine with the left and right being tied together, as it yields what I expect in most situations and in a way encourages a mono-safe mix. But if there are significant phase differences between the channels, I may use a tool that pans them separately, while keeping any later panning stages in mind.

Pan Laws

Pan laws boil down to 2 problems.

- How should the levels adjust between the left and right as the pan knob is moved?

- At what rate should this adjustment occur?

Circular pan law (also called the constant power pan law) uses the 3 dB rule and can work in two ways. It can subtract 3 dB in the center and gradually give back this cut as we pan toward either side, or it can add 3 dB as we move toward the sides. For headroom reasons it makes sense to use the cut version rather than the additive one. The important part is that 3 dB is the adjusting factor.

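The rate at which the left changes relative to the right is chosen so that the sound appears to move in a circle: plotted against each other, the left and right gains trace a quarter circle, so the total power stays constant as the sound is panned.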
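In the usual implementation (assumed here), the circular law uses cosine and sine gains of a pan angle that sweeps from 0 to 90 degrees. The sketch below shows the cut version: each channel sits about 3 dB down at the center, and the sum of the squared gains, which tracks total power, stays at 1 everywhere. The function name `constant_power_pan` is hypothetical.

```python
import math

def constant_power_pan(pan):
    """Circular (constant-power) pan law.

    pan runs from -1.0 (hard left) through 0.0 (center) to +1.0 (hard right).
    The gains are the cosine and sine of an angle sweeping 0..90 degrees, so
    gain_l**2 + gain_r**2 == 1 at every position: the point (gain_l, gain_r)
    moves along a quarter circle.
    """
    theta = (pan + 1) * math.pi / 4   # map -1..1 onto 0..pi/2
    return math.cos(theta), math.sin(theta)

for pan in (-1.0, -0.5, 0.0, 0.5, 1.0):
    gl, gr = constant_power_pan(pan)
    print(f"pan {pan:+.1f}: L {gl:.3f}  R {gr:.3f}  power {gl * gl + gr * gr:.3f}")

gl, _ = constant_power_pan(0.0)
print(round(20 * math.log10(gl), 2))  # -3.01 dB per channel at the center
```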

Reaper gives an additional Hybrid option for how this transition could occur.

Triangular pan law (also called linear pan law) makes no attempt to keep the sound at the same intensity as it is panned, so the middle will sound louder. This may be closer to how we hear in some respects, but it is generally not the default in most sophisticated systems: we can alter the level with the fader, so changing the position shouldn't also change the level. We want each control to do only one thing. Frustratingly, several DAWs use the additive version, so use your ears! The theoretical maximum summation in a triangular pan law situation is 6 dB, if the waves are correlated. In practice, however, rooms usually don't provide ideal summation because of reflections.

The other pan laws essentially pick a transfer curve and a level to adjust by. 4.5 dB is the next most common, but others exist. At the end of the day, you should be aware of how your system handles panning. Most of the time it doesn't matter, and the option that adds the left to the right generally does what we want, but you may find the other way more intuitive. Give both a try!
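To compare curves, the sketch below computes the per-channel level at the center for four laws. Naming differs between DAWs, so treat these as illustrations, not any program's exact formulas: the first, with no center cut, gives the louder middle described for the triangular law above; the second uses gains of 1 - p and p, which some systems also call linear; and the 4.5 dB curve is built here as the geometric mean of the linear and constant-power gains, which is one common construction but an assumption in this sketch. All function names are hypothetical.

```python
import math

# Each law maps pan in [-1.0, 1.0] (hard left .. hard right) to (gain_l, gain_r).

def no_cut_pan(pan):
    """0 dB at the center: each channel stays at full level until it crosses the middle."""
    return min(1.0, 1.0 - pan), min(1.0, 1.0 + pan)

def linear_pan(pan):
    """Gains of (1 - p) and p: each channel is 6 dB down at the center."""
    p = (pan + 1) / 2
    return 1 - p, p

def constant_power_pan(pan):
    """Cosine/sine gains: each channel is about 3 dB down at the center."""
    theta = (pan + 1) * math.pi / 4
    return math.cos(theta), math.sin(theta)

def compromise_pan(pan):
    """About 4.5 dB down at the center: geometric mean of linear and constant-power gains."""
    (l1, r1), (l2, r2) = linear_pan(pan), constant_power_pan(pan)
    return math.sqrt(l1 * l2), math.sqrt(r1 * r2)

for name, law in [("no cut", no_cut_pan), ("linear", linear_pan),
                  ("constant power", constant_power_pan), ("compromise", compromise_pan)]:
    gain_l, _ = law(0.0)
    print(f"{name:>14}: {20 * math.log10(gain_l):6.2f} dB per channel at the center")
```

This prints 0.00, -6.02, -3.01 and -4.52 dB respectively.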

Equalization and Compression

Equalization is the act of adjusting the balance of a spectrum. If these changes are large, they can affect the stereo balance and shift the apparent pan, giving the sense that a sound is louder in one ear than the other. A similar situation can happen with compression. This is why most mastering compressors have a "link" option that applies the same compression to both channels: it preserves the stereo image.
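A simplified way to see why linking matters: the sketch below uses a static compressor curve (a hypothetical threshold of -20 dB and a 4:1 ratio, with no attack or release) on a source whose left channel is 6 dB hotter than its right. Compressing each channel on its own squeezes that 6 dB difference down to 1.5 dB, pulling the source toward the center; a linked compressor applies one shared gain, driven by the louder channel, and keeps the full 6 dB.

```python
def gain_reduction_db(level_db, threshold_db=-20.0, ratio=4.0):
    """Static compressor curve: how many dB to turn a signal at level_db down."""
    over = level_db - threshold_db
    return over - over / ratio if over > 0 else 0.0

def compress(level_l_db, level_r_db, linked):
    """Return output levels (dB) for a stereo pair at the given input levels."""
    if linked:
        # One shared gain, driven by the louder channel.
        gr = gain_reduction_db(max(level_l_db, level_r_db))
        return level_l_db - gr, level_r_db - gr
    # Unlinked: each channel gets its own gain reduction.
    return (level_l_db - gain_reduction_db(level_l_db),
            level_r_db - gain_reduction_db(level_r_db))

# A source panned so the left channel is 6 dB hotter than the right.
l_in, r_in = -4.0, -10.0
for linked in (False, True):
    l_out, r_out = compress(l_in, r_in, linked)
    print(f"linked={linked}: L-R difference {l_out - r_out:.1f} dB")
# linked=False: L-R difference 1.5 dB
# linked=True: L-R difference 6.0 dB
```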

Interaural Timing Differences (ITD)

The next major cue for horizontal sound localization is the interaural timing difference, or ITD. Since ITD relies on timing, part of the cue can be used with only a single ear. In this sense there are 2 "types" of ITD: those that rely on echoes coming back to the ear, and those that rely on the same sound hitting each ear at a different time.

The second type, where the sound hits each ear at a different time, is what people mean when they refer to ITD, as this information is what allows us to locate something in space. Echoes that come back to a single ear give us a sense of the size of a room but not necessarily the location of a source, so they are not really a spatial cue. This perceptual line can get blurry, so we need to understand the difference between a single ear hearing echoes and two ears hearing a single wavefront, and how that changes the information our brain receives.

Delays coming back to the ear play a large role in our perception of size. If a room is big, there will be a long delay between the direct sound and the first sound that bounces off a surface and comes back to the ear. Echoes carry size and surface-material information in how long the sound waves take to come back after reflecting and in how they have been spectrally changed. Someone with only a single ear would be able to detect the size of the room and what the sound may have reflected off, such as brick versus carpet. What they would struggle to do is detect location. Horizontally locating a sound is very hard with a single ear, because you need a second ear to receive the sound and compare when it arrived against when the first ear got it. The brain is no pushover, though, and can do some pretty amazing things with spectral cues to help out, but horizontal localization would still be difficult.
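The link between room size and echo delay is simple arithmetic: the reflection has to travel an extra distance at the speed of sound, roughly 343 m/s in air at room temperature. The sketch assumes the simplest geometry, a listener standing right next to the source facing a single wall, so the extra path is twice the distance to the wall. The helper name is hypothetical.

```python
SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C

def reflection_delay_ms(distance_to_wall_m):
    """Delay (ms) between the direct sound and a reflection off one wall.

    Simplified geometry (an assumption): the listener stands next to the
    source, so the reflection travels to the wall and back, an extra path
    of twice the distance.
    """
    return 2 * distance_to_wall_m / SPEED_OF_SOUND * 1000

for distance in (1.5, 5.0, 30.0):
    print(f"wall {distance:>4} m away -> first echo after {reflection_delay_ms(distance):.1f} ms")
```

A wall 1.5 m away returns an echo after under 9 ms, while one 30 m away takes about 175 ms, which is part of why large spaces sound large.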

ITD leads to a psychoacoustic phenomenon called the Haas effect, which we will talk about in a separate lesson, but it is worth mentioning here.

Because ITD relies on timing differences to create stereo images, it can cause phase issues. Do not let this dissuade you from using it. ITD can do things IID cannot, and size is just one of its powers. With the right delays you can affect a sound's "width" as well. For now I recommend you just try it out: you can set this up simply by adding a delay between the left and right speakers. Reverbs feature a host of interesting options, and other plugins specialize in manipulating IID and ITD cues, such as "Stereoizer" by Nugen Audio (one of my favorites!).
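As a starting point for that experiment, the sketch below builds a stereo pair from a mono signal by delaying the right channel. The helper names and the 48 kHz sample rate are assumptions; a 0.5 ms offset is used because sub-millisecond delays are on the scale of the natural delay between our two ears.

```python
def delay_channel(samples, delay_ms, sample_rate=48000):
    """Delay a channel by prepending silence (the result is longer by the delay)."""
    n = round(delay_ms * sample_rate / 1000)
    return [0.0] * n + list(samples)

def itd_stereo(mono, delay_ms, sample_rate=48000):
    """Left gets the signal first; right gets the same signal delay_ms later."""
    right = delay_channel(mono, delay_ms, sample_rate)
    left = list(mono) + [0.0] * (len(right) - len(mono))  # pad to equal length
    return left, right

click = [1.0] + [0.0] * 99
left, right = itd_stereo(click, 0.5)       # 0.5 ms interaural delay
print(left.index(1.0), right.index(1.0))   # 0 24
```

At 48 kHz a 0.5 ms delay is 24 samples; try varying it and listen for how the image shifts toward the earlier channel.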

It should now make sense that a person with only one ear would still be able to detect a room's size, but not accurately detect horizontal location. Imagine you only have a single headphone in when listening to music. If something were happening on the other side of the mix, you would have no idea! Thankfully, rooms carry echoes, so people who hear with a single ear get a little help from spectral cues.

I want to briefly mention mono reverb here. A mono reverb applies the same echoes, delays and filters to the sound in both channels; a stereo reverb has differences between the channels, but a mono one does not. We get the sense of a room from the timing of the echoes relative to the direct sound and from their filtering, which is why a mono reverb can sound very similar to a regular stereo reverb! Reverb is a principle of "space" and, more so, of "size". That is why something like a mono reverb can exist at all!

Spectral differences

The last piece of the puzzle is spectral cues. A sound may have its spectrum changed before it hits our ears. There are two layers to this:

- The ear applies its own filtering to incoming sound.

- Surfaces the sound hits filter it before it reaches our ears.

This second layer gives us a clue about what the sound has come into contact with, which is why rooms can sound "metallic" or "absorptive".

We can detect vertical location based on the spectral cues our ears' shape gives us. The pinna's shape causes reflected sound to arrive with specific delays, which translate into a change in the spectrum. The reason for this is complex, but it boils down to the fact that extremely short delays act like filters. Head movement can improve this system: we need to hear the sound from at least 2 positions to work out what the change actually is. Once we have worked it out, we get an idea of the vertical position of a source.
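The idea that extremely short delays act like filters can be checked with the simplest case: the direct sound plus one delayed copy. That combination is a comb filter, and its first notch falls at 1/(2τ) for a delay τ. The pinna produces a more complicated pattern of reflections, so treat the 0.1 ms delay and 0.8 reflection gain below as illustrative values, not measurements of a real ear.

```python
import cmath
import math

def comb_gain_db(freq_hz, delay_s, reflection_gain=1.0):
    """Magnitude response (dB) of direct sound plus one delayed, scaled copy.

    H(f) = 1 + g * exp(-j * 2*pi * f * tau)
    """
    h = 1 + reflection_gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return 20 * math.log10(max(abs(h), 1e-12))  # guard against log(0) for g = 1

tau = 0.0001                # a 0.1 ms reflection (illustrative value)
first_notch = 1 / (2 * tau)
print(round(first_notch))   # 5000 (Hz)
for f in (1000, 2500, 5000, 10000):
    print(f, round(comb_gain_db(f, tau, 0.8), 1))
```

With these values the response rises a few dB at low frequencies and dips by about 14 dB at 5 kHz, so a delay far too short to hear as an echo is heard as a change in tone instead.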

So if a person with just holes for ears came into the room they would not have a way to change the spectrum of the sound and thus wouldn't be able to detect vertical locations of sound via spectral cues.

Reflections off surfaces are another ball game. Your ears are not alone: you have eyes, and these cues are combined to give you a greater sense of location. If you hear a lot of high frequencies but in front of you is a carpeted room, there is a good chance the sound is coming from behind, as nothing in front would reflect high frequencies that way. This cooperation between the ears and eyes creates extremely strong images of where a sound source is, and it can be used to great effect in movies and games.

Head Related Transfer Function (HRTF)

The head related transfer function (HRTF) is a mathematical function that describes how sound travels around a head to reach each ear. Each person has a different one, but games use an average one. This allows for much better 3D sound cues than ordinary stereo, such as footsteps above or below a player, or even behind! The HRTF is one of the tools that has made such astonishing precision possible. Part of the reason is the widespread use of headphones. Headphones are unusual because the left and right signals go straight to the left and right ears with no acoustic interaction between them. This means that if we simulate how sound would behave in a given space, the result is preserved much better over headphones, because no acoustic interference occurs!
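In practice an HRTF is commonly applied in the time domain as a pair of head-related impulse responses (HRIRs), one per ear, by convolving the source with each. The sketch below shows only that mechanism; the toy impulse responses are made-up numbers chosen to mimic a source on the left (earlier and louder at the near ear, later and softer at the far ear), not measured HRTF data, and the function names are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution (fine for short illustrative filters)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono source with a left/right head-related impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy HRIRs for a source on the listener's left (hypothetical numbers, not measured data).
hrir_left = [1.0, 0.3]                  # near ear: immediate and strong
hrir_right = [0.0, 0.0, 0.0, 0.4, 0.2]  # far ear: delayed, quieter, smeared

left, right = render_binaural([1.0, 0.0, 0.0], hrir_left, hrir_right)
print(left)   # [1.0, 0.3, 0.0, 0.0]
print(right)  # [0.0, 0.0, 0.0, 0.4, 0.2, 0.0, 0.0]
```

Over headphones each output goes straight to its own ear, so the timing, level and spectral differences baked into the HRIR pair arrive intact.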

To support this series, please consider donating via PayPal or joining the Patreon.